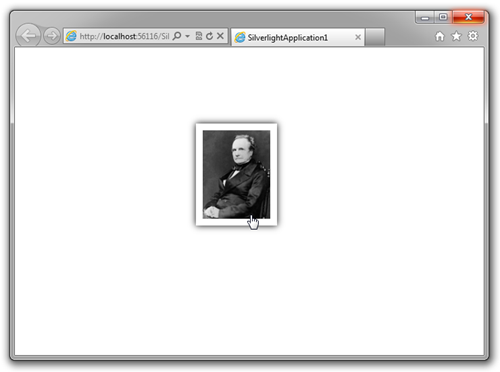

Today, end users expect (or demand) intuitive and interactive web applications. One component of this is the ability to manipulate objects with a mouse. This blog post provides a three-step walkthrough that describes how to create a draggable picture of Charles Babbage, who is often credited with inventing the first mechanical computer.

This walkthrough requires:

- Microsoft Visual Studio 2010 (link)

- Microsoft Visual Studio 2010 Service Pack 1 (link) – Optional

- Microsoft Silverlight 4 Tools for Visual Studio 2010 (link)

- Microsoft Expression Blend SDK for Silverlight 4 (link)

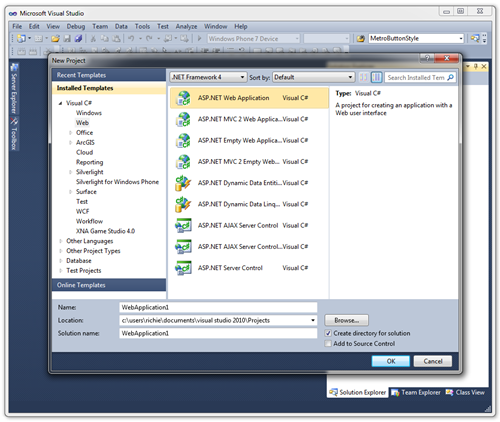

Step 1 – In VS2010, create a new Silverlight application called SilverlightApplication1

Click OK to create a host ASP.NET web application.

Step 2 – Add the Blend interactivity assemblies

Add the following assembly references:

- Microsoft.Expression.Interactions

  `c:\Program Files (x86)\Microsoft SDKs\Expression\Blend\Silverlight\v4.0\Libraries\Microsoft.Expression.Interactions.dll`

- System.Windows.Interactivity

  `c:\Program Files (x86)\Microsoft SDKs\Expression\Blend\Silverlight\v4.0\Libraries\System.Windows.Interactivity.dll`

Step 3 – Add drag behavior to an image

Copy and paste the following code into MainPage.xaml. This will display an image of Charles Babbage in the center of the screen. The XAML that enables mouse dragging is the `<i:Interaction.Behaviors>` element nested inside the `<Image>` element.

    <UserControl
        x:Class="SilverlightApplication1.MainPage"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
        xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
        xmlns:i="http://schemas.microsoft.com/expression/2010/interactivity"
        xmlns:ei="http://schemas.microsoft.com/expression/2010/interactions"
        mc:Ignorable="d"
        d:DesignHeight="600"
        d:DesignWidth="800">
        <Grid x:Name="LayoutRoot" Background="White">
            <Image
                Width="100"
                Source="http://upload.wikimedia.org/wikipedia/commons/6/6b/Charles_Babbage_-_1860.jpg">
                <i:Interaction.Behaviors>
                    <ei:MouseDragElementBehavior/>
                </i:Interaction.Behaviors>
            </Image>
        </Grid>
    </UserControl>

You are done! Press F5 to start the project in debug mode. Using your mouse, you can now drag the picture of Charles Babbage around the screen.
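As an optional refinement that is not part of the walkthrough itself, `MouseDragElementBehavior` exposes a `ConstrainToParentBounds` property. The following is a minimal sketch, assuming the same project name and assembly references as above, that should stop the image from being dragged outside its parent `Grid`:

```xml
<UserControl
    x:Class="SilverlightApplication1.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:i="http://schemas.microsoft.com/expression/2010/interactivity"
    xmlns:ei="http://schemas.microsoft.com/expression/2010/interactions">
    <Grid x:Name="LayoutRoot" Background="White">
        <Image
            Width="100"
            Source="http://upload.wikimedia.org/wikipedia/commons/6/6b/Charles_Babbage_-_1860.jpg">
            <i:Interaction.Behaviors>
                <!-- Assumption: constraining to the parent is desired; omit the attribute for free dragging -->
                <ei:MouseDragElementBehavior ConstrainToParentBounds="True"/>
            </i:Interaction.Behaviors>
        </Image>
    </Grid>
</UserControl>
```

Because `LayoutRoot` fills the Silverlight plug-in area, the practical effect is that the picture stays within the visible page.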

This works fine, but with a little extra work we can make the application more intuitive. By adding a frame, a drop shadow, and a hand cursor, the picture now has a visual cue inviting users to interact.

    <UserControl
        x:Class="SilverlightApplication1.MainPage"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
        xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
        xmlns:i="http://schemas.microsoft.com/expression/2010/interactivity"
        xmlns:ei="http://schemas.microsoft.com/expression/2010/interactions"
        mc:Ignorable="d"
        d:DesignHeight="600"
        d:DesignWidth="800">
        <Grid x:Name="LayoutRoot" Background="White">
            <Grid>
                <Grid Background="White" Cursor="Hand" HorizontalAlignment="Center" VerticalAlignment="Center">
                    <i:Interaction.Behaviors>
                        <ei:MouseDragElementBehavior/>
                    </i:Interaction.Behaviors>
                    <Grid Background="Black">
                        <Grid.Effect>
                            <BlurEffect Radius="15"/>
                        </Grid.Effect>
                    </Grid>
                    <Grid Background="White"/>
                    <Image
                        Margin="10"
                        Width="100"
                        Source="http://upload.wikimedia.org/wikipedia/commons/6/6b/Charles_Babbage_-_1860.jpg"/>
                </Grid>
            </Grid>
        </Grid>
    </UserControl>
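The listing above builds the shadow from a blurred black `Grid` placed behind a white one. As an alternative sketch, not the approach used in this post, Silverlight's built-in `DropShadowEffect` can be applied to a single `Border` that acts as the frame. The `BlurRadius`, `ShadowDepth`, and `Opacity` values here are illustrative assumptions chosen to roughly match the look above:

```xml
<UserControl
    x:Class="SilverlightApplication1.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:i="http://schemas.microsoft.com/expression/2010/interactivity"
    xmlns:ei="http://schemas.microsoft.com/expression/2010/interactions">
    <Grid x:Name="LayoutRoot" Background="White">
        <!-- The Border is the white frame, and it is the element that gets dragged -->
        <Border Background="White" Cursor="Hand"
                HorizontalAlignment="Center" VerticalAlignment="Center">
            <i:Interaction.Behaviors>
                <ei:MouseDragElementBehavior/>
            </i:Interaction.Behaviors>
            <Border.Effect>
                <!-- ShadowDepth="0" spreads the shadow evenly on all sides (assumed styling) -->
                <DropShadowEffect BlurRadius="15" ShadowDepth="0" Opacity="0.6"/>
            </Border.Effect>
            <Image
                Margin="10"
                Width="100"
                Source="http://upload.wikimedia.org/wikipedia/commons/6/6b/Charles_Babbage_-_1860.jpg"/>
        </Border>
    </Grid>
</UserControl>
```

This needs fewer elements, but the effect is applied to the `Border` together with its content, whereas the blurred-`Grid` approach keeps the effect on a separate layer behind the image.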