Yesterday, Esri’s Applications Prototype Lab released a sample for ArcGlobe that allows users to navigate in three dimensions using a Kinect sensor and simple hand gestures.

This post describes KinectControl, a sample utility library developed in conjunction with the ArcGlobe add-in. KinectControl is a WPF user control that can display the raw Kinect feeds and, most importantly, provides developers with the orientation, inclination and extension of both arms relative to the sensor. KinectControl was developed as a generic library that can be used to "Kinectize" any application.
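To make "orientation, inclination and extension" concrete, here is a minimal sketch of how such values could be derived from three arm joints. It is not KinectControl's actual code. It assumes joint positions in a sensor-centred frame (y up, z pointing away from the sensor), and the `Vec3`, `ArmPose` and `arm_pose` names are hypothetical. Orientation is the horizontal angle of the shoulder-to-hand vector, inclination is its angle above the horizontal, and extension is the straight-line shoulder-to-hand distance divided by the length of the upper arm plus forearm.

```python
import math
from typing import NamedTuple


class Vec3(NamedTuple):
    # Hypothetical joint position; assumed frame: x lateral, y up, z away from the sensor.
    x: float
    y: float
    z: float


class ArmPose(NamedTuple):
    orientation_deg: float  # 0 = pointing at the sensor, +90 = pointing toward +x
    inclination_deg: float  # 0 = horizontal, +90 = straight up, -90 = straight down
    extension: float        # 1.0 = fully straight arm, smaller = more bent


def sub(a: Vec3, b: Vec3) -> Vec3:
    return Vec3(a.x - b.x, a.y - b.y, a.z - b.z)


def length(v: Vec3) -> float:
    return math.sqrt(v.x * v.x + v.y * v.y + v.z * v.z)


def arm_pose(shoulder: Vec3, elbow: Vec3, hand: Vec3) -> ArmPose:
    d = sub(hand, shoulder)  # direction the arm is pointing
    # Horizontal angle; -d.z because pointing at the sensor means decreasing z.
    # This angle is meaningless when the arm points straight up or down.
    orientation = math.degrees(math.atan2(d.x, -d.z))
    # Angle between the arm and the horizontal plane.
    inclination = math.degrees(math.atan2(d.y, math.hypot(d.x, d.z)))
    # Ratio of the straight-line reach to the total limb length.
    limb = length(sub(elbow, shoulder)) + length(sub(hand, elbow))
    extension = length(d) / limb if limb > 0 else 0.0
    return ArmPose(orientation, inclination, extension)
```

A reach-to-limb-length ratio is used for extension so the value does not depend on how tall the user is.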

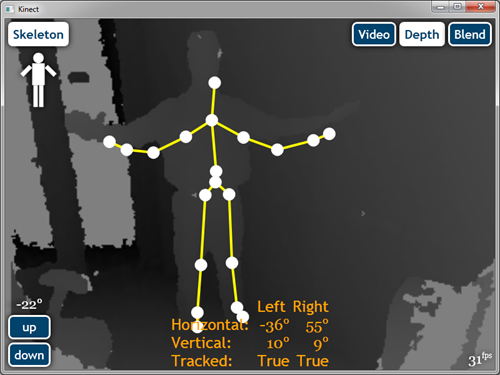

The following screenshots demonstrate the capabilities of KinectControl. By default, KinectControl displays the sensor’s video feed and the skeleton of the person closest to the sensor. The orange text at the bottom of the app is debug information from the test application.

Occasionally a limb may appear red. This indicates that one or more of the limb’s joints cannot be “tracked”, so its position is “inferred” (approximated) by the sensor. This often happens when a joint is obscured from view. For example, if a user points their hand and arm directly at the sensor, the sensor cannot see the user’s shoulder.
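The red-limb rule can be expressed as a small decision over the tracking state of the two joints a limb connects. This sketch is hypothetical: the `TrackingState` enum loosely mirrors the tracked/inferred/not-tracked distinction described above, the colors are placeholders, and skipping limbs with an untracked joint is a design choice of the sketch, not necessarily KinectControl's behavior.

```python
from enum import Enum


class TrackingState(Enum):
    # Hypothetical per-joint tracking states.
    NOT_TRACKED = 0  # no position available
    INFERRED = 1     # position approximated by the sensor
    TRACKED = 2      # position observed directly


def limb_color(start, end, normal_color="white", inferred_color="red"):
    """Return the color to draw the limb between two joints, or None to skip it."""
    states = (start, end)
    if TrackingState.NOT_TRACKED in states:
        return None  # at least one endpoint has no usable position
    if TrackingState.INFERRED in states:
        return inferred_color  # highlight limbs that rely on approximated joints
    return normal_color  # placeholder color for fully tracked limbs
```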

In the upper right-hand corner of KinectControl are three buttons that let the user toggle between three views. The video and depth views are self-explanatory; the third, blend, is a combination of both.

The blend view color-codes each person identified by the Kinect sensor with a different color, as shown below. The Kinect sensor can identify up to seven people.
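One way a blend view could be produced is sketched below, assuming (as we understand the Kinect for Windows SDK depth stream with player tracking enabled) that each 16-bit depth pixel packs the depth in millimetres into its upper 13 bits and a player index into its lower 3 bits, with 0 meaning "no person". Three bits allow indices 1 to 7, which matches the seven-person limit. The palette, the 50/50 mix and the function names are hypothetical, and the sketch assumes depth and video pixels have already been aligned.

```python
# Assumed packing: [13 bits depth (mm) | 3 bits player index], index 0 = background.
PLAYER_INDEX_BITS = 3
PLAYER_INDEX_MASK = (1 << PLAYER_INDEX_BITS) - 1  # 0b111

# Hypothetical palette: one tint per player index 1..7.
PALETTE = [
    (255, 0, 0), (0, 255, 0), (0, 0, 255),
    (255, 255, 0), (255, 0, 255), (0, 255, 255), (255, 128, 0),
]


def split_depth_pixel(raw):
    """Split a packed depth pixel into (depth_mm, player_index)."""
    return raw >> PLAYER_INDEX_BITS, raw & PLAYER_INDEX_MASK


def blend_pixel(video_rgb, raw_depth):
    """Mix a video pixel 50/50 with its player's tint; background pixels are unchanged."""
    _, player = split_depth_pixel(raw_depth)
    if player == 0:
        return video_rgb
    tint = PALETTE[player - 1]
    return tuple((v + t) // 2 for v, t in zip(video_rgb, tint))
```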

The white stick-figure graphic in the upper left-hand corner alerts the user whenever he or she has moved beyond the Kinect’s field of view. For example, in the screenshot below, the user has moved too far to their left.
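A field-of-view warning like this can be approximated geometrically by comparing a body point's horizontal angle from the sensor with half the camera's field of view. This is a sketch under stated assumptions, not how KinectControl detects the condition: the 57° horizontal field of view is an assumed figure to check against the sensor's specifications, and `fov_side` and its `margin_deg` parameter are hypothetical.

```python
import math

# Assumed horizontal field of view of the depth camera, in degrees.
HORIZONTAL_FOV_DEG = 57.0


def fov_side(x, z, fov_deg=HORIZONTAL_FOV_DEG, margin_deg=0.0):
    """Return +1 or -1 if the point (x, z) lies beyond the +x or -x edge of the
    horizontal field of view, or 0 if it is inside.

    x is the lateral offset and z the distance from the sensor (same units).
    margin_deg narrows the usable field so the warning fires a little early.
    """
    half = fov_deg / 2.0 - margin_deg
    angle = math.degrees(math.atan2(x, z))  # 0 = straight ahead of the sensor
    if angle > half:
        return 1
    if angle < -half:
        return -1
    return 0
```

Which sign corresponds to the user’s left depends on the sensor’s coordinate convention, and because the user faces the sensor, their left is the sensor’s right.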

In the bottom left-hand corner are two buttons that control the inclination (tilt) of the sensor. Each click moves the sensor one degree up or down.
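The tilt buttons amount to stepping the requested angle by one degree and keeping it within the motor's range. The sketch below assumes a range of -27° to +27°, which should be checked against the SDK's reported minimum and maximum elevation angles; `next_tilt` is a hypothetical helper, not part of KinectControl.

```python
# Assumed mechanical limits of the tilt motor, in degrees.
MIN_TILT_DEG = -27
MAX_TILT_DEG = 27


def next_tilt(current_deg, step_deg):
    """Angle to request after one button click (step_deg = +1 or -1),
    clamped to the assumed motor range."""
    return max(MIN_TILT_DEG, min(MAX_TILT_DEG, current_deg + step_deg))
```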

Lastly, the test application included with the sample uses data binding to display the orientation and inclination of the left and right arms on screen.

Please click the link below to download the KinectControl sample. To use this sample, you need a Kinect connected to a Windows 7 computer with the Kinect for Windows SDK installed.